Multitouch Table Exhibit with Audio Layer Prototype

As I mentioned in my previous post, Open Exhibits Lead Developer Charles Veasey and I attended a workshop at the Museum of Science in Boston this week that explored accessibility issues in computer-based exhibits. In the next few weeks, we will share a number of findings from the workshop, which was held as part of the NSF-sponsored Creating Museum Media for Everyone (CMME) project.

I want to start this process by sharing some of the findings from our breakout group, which, over the course of a day and a half, explored the challenges in creating audio descriptions for multitouch / multiuser exhibits. In particular, we looked at developing an assistive audio layer for a multitouch table exhibit.

Push Button Audio

For many years, kiosks have been made more accessible by adding audio descriptive layers, commonly activated by a push button. This feature has allowed blind, low-vision, and non-reading museum visitors to access content. At the Museum of Science in Boston, most kiosks have a standard set of buttons for descriptive audio with a “hearphone” (an audio handset), along with another set of buttons that are used for navigation.

Our group discussed the possibility of developing a similar system as an adjunct element on the side of a multitouch table or near the installation. However, this approach would essentially require developing an additional stand-alone audio exhibit. More importantly, the experience that visitors would have would be fundamentally different from interacting directly on the table itself.

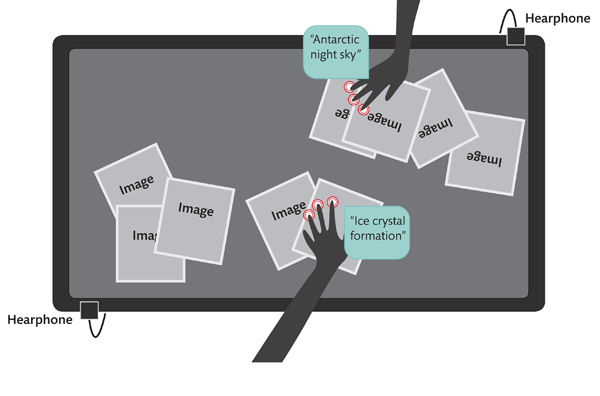

On a large multitouch table, multiple visitors can interact simultaneously with physical multitouch gestures. The experience is both physical and social. By relegating a visitor to an audio button system, you are essentially isolating them from the more compelling qualities that are inherent in multitouch and multiuser exhibits.

Two Approaches

We looked at two possible approaches for integrating audio into a multitouch table. One involved using unique gestures to activate audio descriptions. The other approach involved the use of a fiducial device in the form of an “audio puck” to do the same.

Both approaches would make use of an introductory “station” and/or a familiar push-button “hearphone” to orient blind, low-vision, or non-reading users to the table and instruct them on how to either activate the audio via a unique gesture or use the “audio puck.” This portion of the experience would be brief, but it is important for helping the visitor understand how to use the audio descriptive layer.

You can see a PDF document of our presentation here (114KB).

In the gesture-based approach, we decided to implement a gesture that would probably not be activated accidentally by visitors. A three-finger drag was used to provide short audio descriptions of the objects and elements found on the table. A three-finger tap activated a lengthier audio description, and we discussed the possibility of a three-finger double-tap to activate yet another layer. Most visitors interact with digital objects using either two fingers or a whole hand, so there was less of a chance that the usual two-point or five-point gestures would trigger the audio accidentally.
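As a rough illustration of the gesture mapping described above, here is a minimal Python sketch. It is not the prototype's code; the names `Gesture`, `AudioLevel`, and `audio_level_for` are hypothetical, and it assumes the touch framework already reports how many fingers are down and what kind of gesture was recognized.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class AudioLevel(Enum):
    """Hypothetical names for the audio description layers."""
    SHORT = "short description"
    LONG = "lengthier description"
    EXTENDED = "additional layer"


@dataclass(frozen=True)
class Gesture:
    touch_points: int  # number of fingers on the surface
    kind: str          # assumed values: "drag", "tap", "double_tap"


# Only three-finger gestures are mapped to audio.
THREE_FINGER_ACTIONS = {
    "drag": AudioLevel.SHORT,
    "tap": AudioLevel.LONG,
    "double_tap": AudioLevel.EXTENDED,
}


def audio_level_for(gesture: Gesture) -> Optional[AudioLevel]:
    """Return the audio layer to play, or None to leave audio silent."""
    if gesture.touch_points != 3:
        # Two-finger and whole-hand gestures keep their usual meaning.
        return None
    return THREE_FINGER_ACTIONS.get(gesture.kind)
```

With this mapping, `audio_level_for(Gesture(3, "drag"))` selects the short description, while a two-finger drag or a five-finger tap returns `None` and is handled as an ordinary interaction.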

Since these are unique gestures, the orientation to the exhibit is very important. Visitors need to know how to access the audio layer. While some standard gestures for audio have emerged on personal devices, they are not necessarily compatible with the interactions found in a multiuser space on a multitouch table. For example, our group member Dr. Cary Supalo, who is blind, uses an anchored two-finger swipe on his iPhone to activate audio (the thumb is stationary and the two fingers swipe). However, that type of gesture is similar to many others that are used to accomplish very different tasks. If we enabled this gesture on the table, visitors would likely trigger the audio descriptions inadvertently and/or not be able to interact in ways that are familiar to them.

As part of our group project, Charles programmed a prototype exhibit using Open Exhibits Collection Viewer that allowed us to test the functionality of the three-finger gesture approach. This rapid prototype demonstrated how the audio descriptive layer might work.

Along with being able to test the interaction, the prototype software allowed us to split the audio into two separate zones. There are ongoing concerns about the audio playback. Will the audio disrupt other visitors’ experience? How does the exhibit handle multiple, simultaneous audio streams? By using directional audio, we hoped to mitigate this issue. Unfortunately, due to some audio problems in the rapid prototyping process, the results were inconclusive; we will need to develop another prototype to better understand the dynamics of sound separation in the table environment and whether two simultaneous audio streams can work effectively in a museum setting.
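One way to picture the two-zone idea is to route each description to a single stereo channel, depending on which half of the table the gesture came from. The Python sketch below only illustrates that routing under stated assumptions: the function names, the left/right split at the table's midpoint, and the hard-panned gains are all hypothetical and are not taken from the prototype.

```python
from typing import Tuple


def zone_for(x: float, table_width: float) -> str:
    """Assign a touch to the left or right audio zone by its x position."""
    if not 0 <= x <= table_width:
        raise ValueError("touch is outside the table surface")
    return "left" if x < table_width / 2 else "right"


def channel_gains(zone: str) -> Tuple[float, float]:
    """Return (left_gain, right_gain) so each zone uses one speaker only."""
    gains = {"left": (1.0, 0.0), "right": (0.0, 1.0)}
    return gains[zone]
```

For a table 1920 units wide, a gesture at x = 1500 falls in the right zone and would be played with gains `(0.0, 1.0)`. Whether this kind of separation is enough in a noisy gallery is exactly the open question described above.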

In our second scenario, we imagined using an “audio puck” that would work in a similar way to the gesture-activated approach. The group felt that in some ways this would be the preferred option. Audio would probably not be set off accidentally. A button on the fiducial would allow users to click to get more thorough audio descriptions. Overall, it is a simpler and more direct approach for visitors. Also, importantly, the pucks would be of assistance to visitors with limited mobility and/or dexterity. Finally, you would only have as many simultaneous audio streams as you would have pucks on the table, so exhibit developers could easily limit the number of possible audio streams.
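The point about limiting streams can be sketched in a few lines of Python. This is a hypothetical illustration, not a design from the workshop: the class `PuckAudio`, its method names, and the puck and object IDs are all assumptions. Each known puck owns at most one stream, so the number of simultaneous streams can never exceed the number of pucks issued.

```python
from typing import Dict, Iterable, Optional


class PuckAudio:
    """Tracks one audio stream per puck (hypothetical sketch)."""

    def __init__(self, puck_ids: Iterable[str]) -> None:
        self._known = set(puck_ids)
        self._playing: Dict[str, str] = {}  # puck id -> described object id

    def place(self, puck_id: str, object_id: str) -> bool:
        """Start describing an object; unknown fiducials are ignored."""
        if puck_id not in self._known:
            return False
        # Moving a puck replaces its previous stream instead of adding one.
        self._playing[puck_id] = object_id
        return True

    def lift(self, puck_id: str) -> None:
        """Stop the stream when the puck leaves the table."""
        self._playing.pop(puck_id, None)

    def now_playing(self, puck_id: str) -> Optional[str]:
        return self._playing.get(puck_id)

    @property
    def active_streams(self) -> int:
        return len(self._playing)
```

With two pucks registered, `active_streams` stays at two or fewer no matter how many objects visitors move the pucks across.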

The drawbacks in this approach would be additional development time (in fiducial recognition and fabrication of the audio pucks) and limitations on some multitouch devices (such as IR overlays and projected capacitive screens). Also, whenever untethered physical objects appear in a busy museum environment there is a chance they will get lost, stolen, or damaged.

Conclusion

Multitouch and multiuser exhibits such as multitouch tables and walls are becoming much more common in museums and appear to be here to stay. At the same time, very little has been done in researching accessibility issues and providing tools to make these types of exhibits more accessible to individuals with disabilities. We have a lot of work to do in this area and this is just a start.

We are happy to report that most workshop attendees who saw the presentation and interacted with the rough prototype agreed that both approaches have potential. Obviously, more testing and development need to happen, but we are now looking quite seriously at expanding our user testing and eventually integrating a descriptive audio feature into the Open Exhibits framework. This would then be available to all Open Exhibits developers and could be configured in a variety of ways. We hope that by making this feature available (along with guidelines for “getting started” with descriptive audio), the larger community might be able to make more progress. As always, we welcome your questions and feedback on this topic.

Acknowledgements and More About CMME

We had a fantastic group! Charles and I would like to acknowledge everyone who contributed: Michael Wall from San Diego Natural History Museum, Cary Supalo from Independence Science, and a great group from the Museum of Science, Boston that included Juli Goss, Betty Davidson, Emily Roose, Stephanie Lacovelli, and Matthew Charron.

You can learn more about the NSF-funded Creating Museum Media for Everyone (CMME) project on the Informal Science website. You can find a few pictures from the workshop on the Open Exhibits Flickr site (much more to come!). Also, take a look at the Independence Science Access Blog, which published an article on the workshop as well: Dr. Supalo Consults with Boston Museum of Science to Develop Accessible Museum Exhibits.

We will continue to share materials, resources, and information from the project here on the Open Exhibits website. We plan on developing an entire section of the site devoted to the CMME project and to issues concerning accessibility and universal design. Many thanks to Principal Investigator Christine Reich, project manager Anna Lindgren-Streicher, and all of the amazing staff at the Museum of Science, Boston for organizing such an informative and exciting workshop.

by Jim Spadaccini on May 26, 2012

on May 26, 2012

No comment, questions. You say there is a lot of work to do. How close do you think you are to having a workable solution? What additional costs are expected to ensure accessibility compliance? And do you think the solution would be easily performed as a retrofit to an existing touch table?

Timothy. Happy to answer your questions here. We are still working out the details within the CMME project toward developing a workable solution. We are pretty confident we will be able to get the resources to do the necessary development. We hope to have this solution as part of the Open Exhibits framework and as a driver add-on by late Fall.

The system as we plan to implement it wouldn’t add any cost at the software level. Open Exhibits and the CMME project would release this software (free for educational use). There would be a nominal cost if we used the “puck” fiducial: the cost of the object itself. The idea is that the driver would work with existing overlay technology. This is the technology that we (Ideum) use in our touch tables.

This would not be a universal solution for every touch table. Vision systems would need another “bridge” to take advantage of the audio layer. Also, this would be harder with emerging projected capacitive touch tables; we would need to find yet another solution for those types of devices.

Hi – Will you be planning to offer the same content in the audio as you will do as text for sighted/reading users, that is, a parallel experience? Or is the idea to offer a separate experience, maybe a fuller description of the image as well as whatever accompanying text might be there to describe main ideas of the image? Your illustration seems to only show images on the table, not text, so I am not sure if you intended to have text in the images that was narrated.

Katie. In our prototype we did include descriptive text with information about the images. The idea of the audio layer is that we would create a framework that allows the developer to decide what type of text to provide.