Improved Papers and A New Bounty

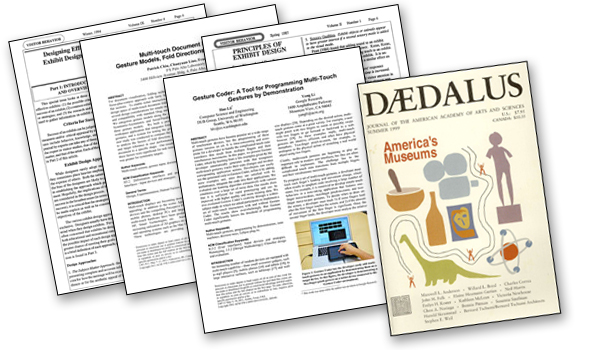

As we gear up for the release of Open Exhibits 2.5 later this year, we have expanded the Papers section of the website. Papers now includes a search function and better tagging to help you navigate and find what you are looking for. We have also added a new category, Exhibit Design, that can be used for papers, articles and web resources on the design of multitouch exhibits in any context. These resources may be recent or they may be older, canonical references that explain the underlying principles of creating effective multiuser exhibits.

A case in point is the newly added article Principles of Exhibit Design from the 1987 edition of the Journal of Visitor Behavior. Authors Stephen Bitgood and Don Patterson draw on historical research and foundational visitor studies to formulate a set of recommendations for designing user-centred exhibits. They cover the various aspects that affect the visitor experience, including exhibit content, social interaction between visitors, and the placement of exhibits within busy gallery spaces. The principles they identify remain relevant to the challenges of designing multitouch applications today.

To kick things off, we are offering a $25.00 Amazon gift certificate to an Open Exhibits member who can help us populate the new Exhibit Design category. There's not much to it: simply find at least three scholarly papers or articles and upload (or link) them to the newly improved Papers section before September 30, 2012. Make sure to categorize and tag them correctly, and you will receive your bounty! We would like to encourage all members to contribute to our growing library of resources for multitouch designers and developers.

Thinking Outside the Screen

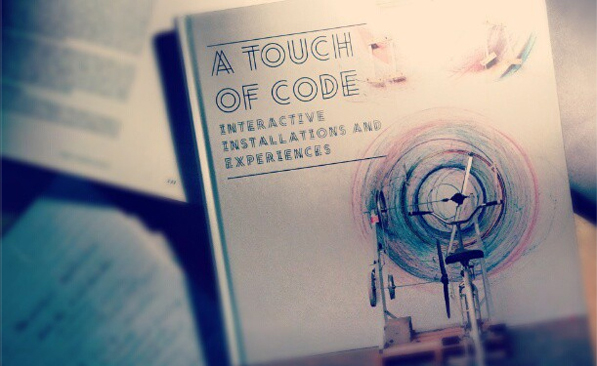

Last week I mentioned the prototype applications that we will be building with Open Exhibits during the next three weeks. To get the creative juices flowing, I checked out the newest addition to Ideum's technical library. Published in 2011, A Touch of Code: Interactive Installations and Experiences documents hundreds of digital installations from the last decade. It includes work by agencies, research labs and independent artists working in the fields of interaction design and new media art.

Of all the projects, two stood out for me. The first is Big Screams, a participatory game for mobile devices created by Canadian artist-programmer Elie Zananiri. The concept is similar to Open Exhibits' Heist, which relies on communication between mobile phones and a touchtable. Instead of connecting to a private network as in Heist, players of Big Screams dial a phone number to connect. Once a player is connected, an icon identified by their phone number appears on a giant wall projection. Then the madness truly begins: to move your icon across the wall, you scream into your phone's microphone. The volume of your scream determines how successful you are; scream louder than everyone else to repel their icons and win the game.

I love the spectacle Big Screams produces. Watching the game is nearly as engaging (and deafening!) as playing. In busy public spaces like the British Museum's Great Court it is impossible to involve everyone, so activities that provide an enjoyable experience for passive onlookers are crucial. The ability to play from your own mobile device is also important, and in this case there are no cross-platform compatibility issues: because players simply make a phone call, the voice interface works on any type of phone. Heist takes this model a step further by allowing users to connect and save information, which has great potential for the way museums work.

Another compelling work is Touched Echo, a sonic intervention in the German city of Dresden created by Markus Kison. Based on the principle that human bones conduct sound, the installation uses engraved plates attached to a metal balustrade to deliver audio content. Users rest their elbows on the railing and cup their hands over their ears like headphones to listen to audio clips that simulate the frightening sounds of a World War II air raid. It is a fascinating twist on the traditional audio tour and an inventive way of embedding sounds in the urban landscape.

Watching the video of people interacting with Touched Echo, I appreciate how it is both a personal and a shared experience. Although people listen to clips individually, using their own bodies as a sounding board, social interaction occurs when others see them using it or when someone explains how it works. The balance between individual and communal experience is a challenging design problem for multiuser applications, but this work gets it right. I could imagine this technique working well in a natural history or medical museum, in exhibits that explore the human body. I also wonder whether it would be effective in historic cemeteries or memorials to resurrect the voices of those who have died. This goes far beyond the scope of implementing applications for screens and tables, but it inspired me to think outside the box about multiuser, multitouch exhibits.

Open Exhibits 2.5 - Coming This Fall

This November will mark the second anniversary of Open Exhibits. At launch, we released version 1.0 of the Open Exhibits multitouch framework and opened the community site. In March of this year, we completely redesigned and rebuilt the website and released version 2.0 of the Open Exhibits SDK (software development kit).

Open Exhibits 2.5 builds on our prior releases, adding new functionality and improving the stability of the current version. (GestureWorks, the commercial counterpart to Open Exhibits, will be updated to version 3.5 just prior to the OE release.) Our expanded software team is pushing ahead with a series of new features for this major release.

New Components

Several new Open Exhibits Components will become available with version 2.5. These include: Album Viewer, Flickr Viewer, Live Video Viewer, Wav Player and YouTube Player. The Map Viewer will also be released; however, because of changes to the Google Maps API key policy, it will use Modest Maps instead.

These components can be altered or combined by ActionScript developers to create new multitouch applications. They can also be customized by non-programmers, who can edit the Creative Markup Language (CML) XML text files that come with each component. This will make it even easier for authors and editors to add media and to change text descriptions, styles and basic layouts.

New UI Elements

Another major feature is the inclusion of new UI Elements. These small functional user-interface elements make it easier for developers to customize and extend multitouch applications. The UI elements include: onscreen keyboard, magnifier, date picker, color picker, orb menu, dials, switches, and text input box. All of these elements are customizable and multitouch enabled.

Gesture Processing, Gesture Support and Improvements to the SDK

A number of improvements to the gesture engine and other features found within the Open Exhibits SDK (GestureWorks SDK) will be released with the new version. These include:

- Improved gesture support

- More efficient gesture processing

- Support for custom gesture event naming

- Improved built-in physics engine

- Additional gestures, including improved tap and hold gestures

- New Creative Markup Language (CML) stand-alone player

- New layout algorithms

- Powerful template-render kit

- New example and tutorial files and resources

We will elaborate on all of these new features as we get closer to releasing Open Exhibits 2.5. We plan to announce the release date in a couple of weeks. Stay tuned; there is much more to come.

London to New Mexico: Multitouch for the British Museum

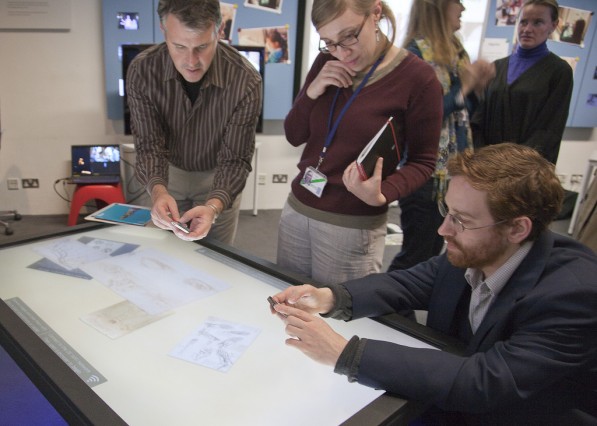

Leaving the London Olympic madness behind, I have landed in New Mexico for a month-long fellowship with Open Exhibits. It has been a long road from the multitouch seminar held at The British Museum in October 2011. That event brought an Ideum multitouch table running Open Exhibits software to the Samsung Digital Discovery Centre, the museum's ICT learning space. Museum staff were invited to explore the table and talk about how it might be used in gallery-based interpretation and learning. We also had demonstrations of augmented reality, RFID, and a gesture-controlled gigapixel viewer by Open Exhibits developer Samuel Cox.

Like many heritage institutions, the British Museum has taken a conservative approach to gallery technology. Last year’s seminar was, for many staff members, their first encounter with multitouch and gesture exhibits. Staff from across the museum (curatorial, interpretation, education, IT, exhibitions, facilities) explored how these technologies could be integrated into temporary exhibitions and permanent galleries. The two-day event culminated in a discussion with Curator Jill Cook about digital media in the upcoming Ice Age Art exhibition.

I respect the reasons for that caution: technology is not an end in itself. It is simply another tool in our arsenal of interpretive techniques to help visitors better appreciate our collections. It should complement and support rather than distract. Over the next few weeks with Open Exhibits, I hope to gain a clearer understanding of how multitouch and gesture technologies in particular can help facilitate these supportive interactions with objects. During the next year, these technologies will be the strategic focus of the Samsung Centre. We will use the flexible, facilitated setting of our education programs to try them out. Hopefully these experiments will yield insights that can be fed back into the wider museum and shared with the community of education and interpretation professionals looking for guidance on how best to employ multitouch technologies.

I have an idea for a multitouch exhibit that we hope to build during the next few weeks, and I will blog about the process. I am excited about the potential of Open Exhibits to provide our team with an easy (and free) platform for developing these experimental applications on our own. Watch this space.

Research Update: Institutional Review Board (IRB) Submission

The Open Exhibits IRB application is officially under review at the University of New Mexico Human Research Protections Office! As soon as the study is approved, we can start collecting data.

The research plan was submitted for Expedited New Study review, so if all goes well we should be able to collect data in a few weeks.

IRBs are committees established under federal law to ensure the ethical treatment of human research subjects (http://www.hhs.gov/ohrp/).